Inference demand is accelerating at a breakneck pace, fueling both the expansion of data centers and the evolution of chip design to keep up with artificial intelligence-native applications and agentic models.

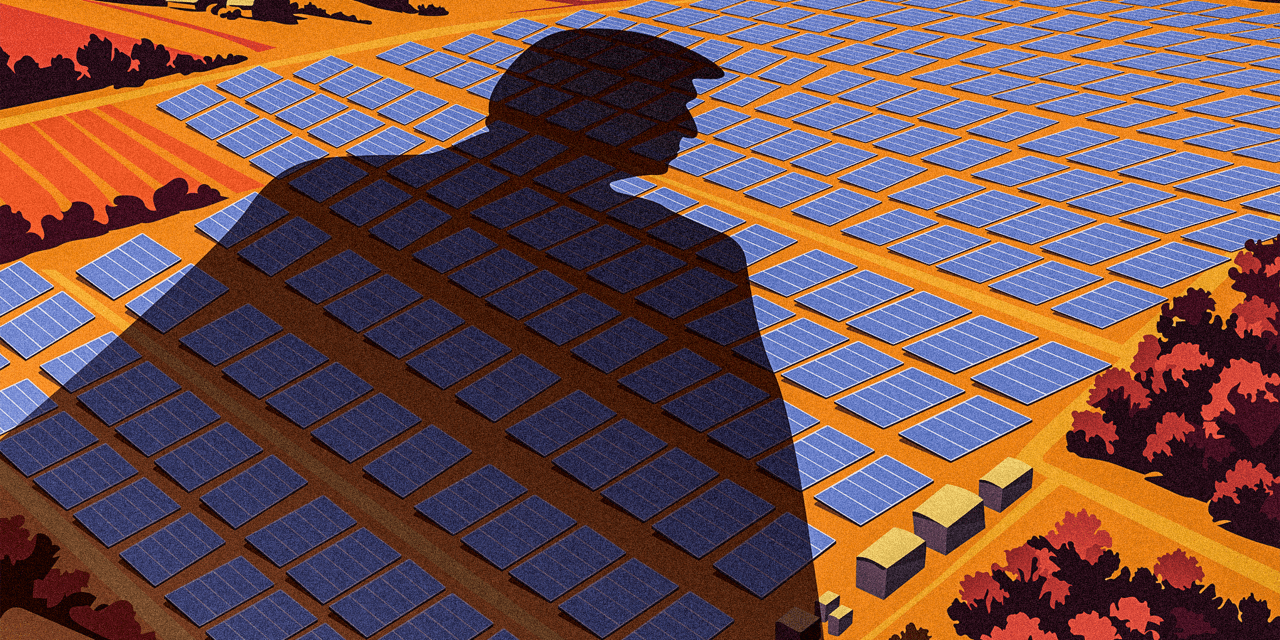

The rush to meet this demand highlights a new phase in computing, where speed and efficiency have become non-negotiable. Organizations are no longer satisfied with incremental performance gains; they are pushing for architectures that can handle reasoning models, code generation and adaptive agentic systems in real time. This surge is reshaping how companies think about data infrastructure, creating ripple effects across hardware design, software innovation and global investment in AI-ready facilities, according to Andrew Feldman (pictured), founder and chief executive officer of Cerebras Systems Inc.

Cerebras Systems’ Andrew Feldman spoke with theCUBE about inference demand during the AI Factories event.

“The willingness of people to wait 10, 20, 30 seconds, a minute, three minutes, nobody wants to sit and watch the little dial spin while they achieve nothing,” Feldman said. “By being able to deliver inference at these extraordinary rates, we found extraordinary demand.”

Feldman spoke with theCUBE’s John Furrier at theCUBE + NYSE Wired: AI Factories – Data Centers of the Future event, during an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. They discussed the rapid growth in inference demand and the global expansion of data centers to meet AI demand.

Inference demand drives global data center expansion

The demand for AI compute continues to grow, signaling this is not a bubble but a sustained trend, according to Feldman. To meet that need, Cerebras just opened its fifth U.S. data center in Oklahoma City, Feldman noted.

“This is a 10-megawatt facility, and we immediately began expansion. We opened it, we expanded it,” he said. “We’re looking for data centers around the world.”

When leaders such as OpenAI Inc. and Anthropic PBC call for more compute, it highlights the scale of demand, and the same is true when leaders in the integrated development environment space push for greater capacity, according to Feldman.

“What you’re seeing is this sort of groundswell up out of the application and it’s washing over the makers of software and there is just this giant sucking sound,” he said. “That sucking sound means we need to deliver more compute to do more inference, that compute needs to live in more data centers, and it just washes back down the supply chain.”

During the AI Factories event, some argued that x86, GPUs and XPUs have long been interconnected in established ways. Others, however, see that approach as naive and outdated.

“There are very little problems in this world where you can just add and connect for free,” Feldman emphasized. “When you break up a problem and you separate it, there’s a communication overhead. The probability of miscommunication jumps through the roof.”

When a problem is fractured and spread across thousands of GPUs, both the issues and complexity multiply rapidly. This is why only a handful of organizations in the world can train with 10,000 to 15,000 or more GPUs, according to Feldman.

“There may be a handful in the world. This is complexity that, at Cerebras, we seek to eliminate,” he added. “By building the largest chip in the industry, we keep more information, more data on chip, we move it less often, we use less power and we deliver answers in less time.”

Photo: SiliconANGLE
